In one of my recent posts I demonstrated how to implement a simple WCF service using Visual Studio 2008. This time I’ll look at how to implement the client. In doing so I’ll highlight an important oversight in quite a few examples on the internet, including at least one provided by Microsoft (see references)!

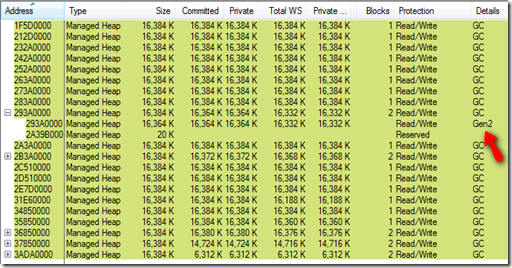

I’ll continue where I left the solution last time:

I’ll add a service client next. Many different options are possible: a Forms-based native application, a WPF-based client, an ASP.NET site, a command-line tool, and many more. For this example I’ll use an ASP.NET front-end.

Creating the ASP.NET Application

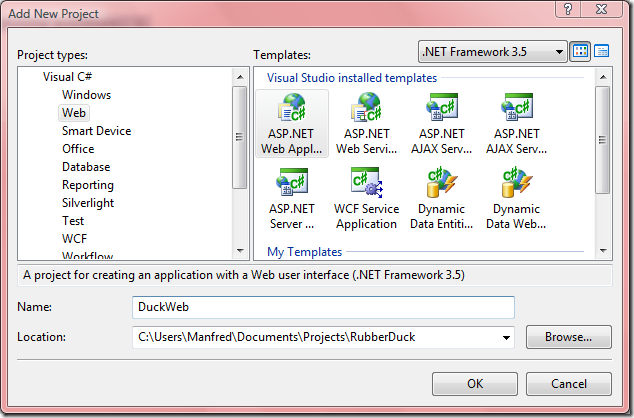

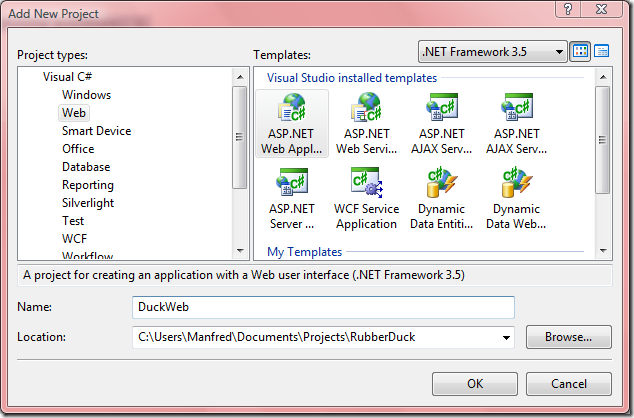

I’ll add a new project to the solution. Here is the recipe:

- Select from the solution context menu “Add”, then “New Project…”

- In the tree on the left expand the “Visual C#” node, then click “Web”

- On the right select “ASP.NET Web Application”

- As a name enter “DuckWeb”.

Here is what it should look like just before you click “OK”:

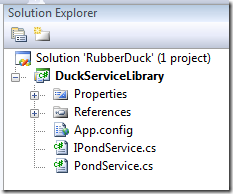

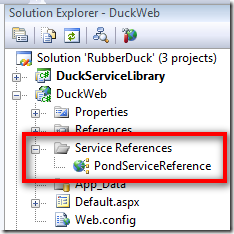

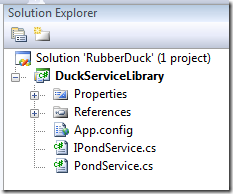

Click OK and your solution should now look like this (I collapsed the DuckServiceLibrary to save some space):

Open the file Default.aspx in source mode and add a label, a text box and a button as follows:

```aspx
<%@ Page Language="C#" AutoEventWireup="true"
    CodeBehind="Default.aspx.cs" Inherits="DuckWeb._Default" %>
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN"
    "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">
<html xmlns="http://www.w3.org/1999/xhtml" >
<head runat="server">
    <title></title>
</head>
<body>
    <form id="form1" runat="server">
    <div>
        <asp:Label ID="_pondNameLabel" runat="server" Text="Pond Name:">
        </asp:Label>
        <asp:TextBox ID="_pondNameTextBox" runat="server"></asp:TextBox>
        <asp:Button ID="_submitButton" runat="server" Text="Submit"
            onclick="_submitButton_Click" />
        <br />
        <br />
        <asp:Label ID="_pondStatusMessage" runat="server" Text="Status unknown.">
        </asp:Label>
    </div>
    </form>
</body>
</html>
```

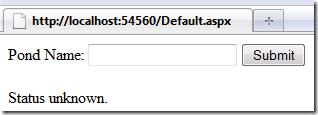

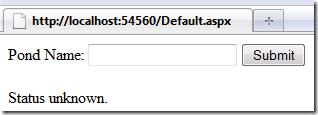

When you view this in a browser it looks like this:

It doesn’t do anything yet, however. Next I’ll add a handler for the button’s click event. In the Design view of Visual Studio for Default.aspx I simply double-click the button, which gives me the following handler:

```csharp
protected void _submitButton_Click(object sender, EventArgs e) {

}
```

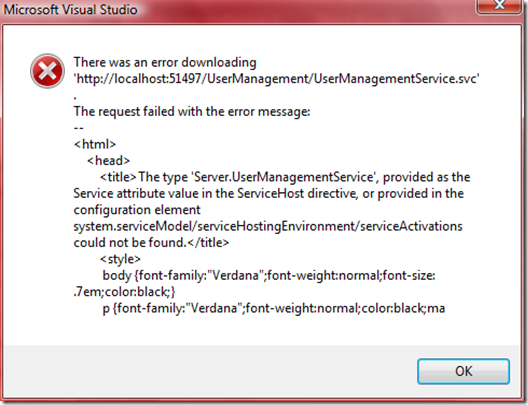

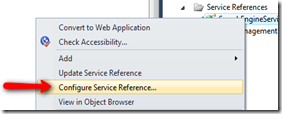

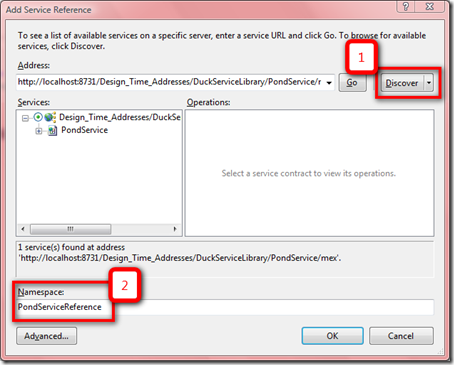

In this handler I’ll add the code that calls the service. However, in order to consume the service the web application needs a reference to it. That’s easy to do: right-click the project “DuckWeb” in the Solution Explorer and select “Add Service Reference…”:

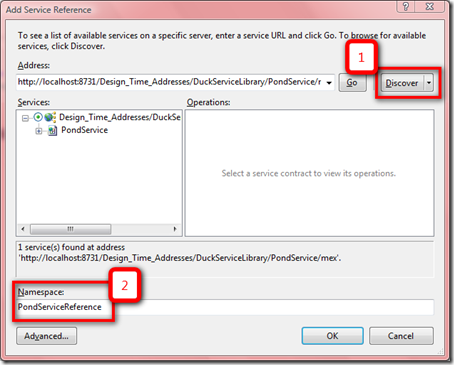

In the “Add Service Reference” dialog I click the “Discover” button and then enter as namespace at the bottom “PondServiceReference”:

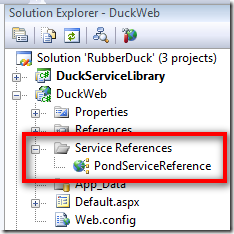

Upon clicking “OK” the service reference is added and I can start using the service as if it were just another .NET library.

Let’s return to the _submitButton_Click() event handler. Since I now have the service reference available I can write my first implementation:

```csharp
using System;
using System.Web.UI;

using DuckWeb.PondServiceReference;

namespace DuckWeb {
    public partial class _Default : Page {
        protected void Page_Load(object sender, EventArgs e) {

        }

        protected void _submitButton_Click(object sender, EventArgs e) {
            var client = new PondServiceClient();

            _pondStatusMessage.Text = _pondNameTextBox.Text +
                (client.IsFrozen(_pondNameTextBox.Text) ?
                " is frozen." : " free of ice.");
        }
    }
}
```

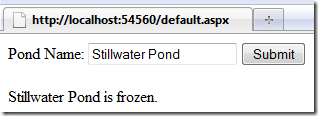

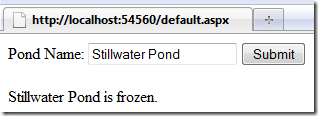

Note that I added a using directive for the namespace that contains the generated classes for the service reference. Please also note that this code is far from production-ready, but I’ll come back to this shortly. First let’s see whether this already works as expected. After setting the DuckWeb project as the startup project, I launch the browser and give it a test run:

Just what I wanted! So all good, right? Not quite! Return to the code where I create the PondServiceClient instance:

```csharp
var client = new PondServiceClient();
```

This line creates the client, and the first call on it opens a connection to the service. However, nowhere in the remainder of the code do I close this connection. At some point the instance will be garbage collected and the connection will eventually be closed, but if I rely on this mechanism I have very little control over when that happens. In this simple example it may not be a big issue. However, if the service implementation holds other resources per connection, e.g. a database connection, or if the service is configured to serve only a certain number of open connections, e.g. 10, then the system will get into trouble very quickly. Unfortunately, some examples on MSDN and similar sites don’t close WCF connections either (see references below), so chances are that we’ll see service clients that aren’t implemented correctly.
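To make the limit of 10 concrete, here is a rough sketch of how a service host could be throttled in its configuration file. The behavior name `ThrottledPondBehavior` is made up for this illustration, and I’m assuming a session-based binding; the original service from the previous post does not necessarily contain such a setting.

```xml
<system.serviceModel>
  <behaviors>
    <serviceBehaviors>
      <!-- Hypothetical behavior name; reference it from the <service>
           element via behaviorConfiguration="ThrottledPondBehavior". -->
      <behavior name="ThrottledPondBehavior">
        <!-- Accept at most 10 concurrent sessions. -->
        <serviceThrottling maxConcurrentSessions="10" />
      </behavior>
    </serviceBehaviors>
  </behaviors>
</system.serviceModel>
```

With a setting like this, every client that is never closed keeps occupying one of the 10 sessions until it times out, so further clients have to wait.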

Therefore let’s add a line that closes the connection:

```csharp
protected void _submitButton_Click(object sender, EventArgs e) {
    var client = new PondServiceClient();

    _pondStatusMessage.Text = _pondNameTextBox.Text +
        (client.IsFrozen(_pondNameTextBox.Text)
            ?
                " is frozen."
            : " free of ice.");
    client.Close();
}
```

This already looks better. But it is still not good enough!

What if we cannot connect to the service for whatever reason? If that is the case the generated code will throw different types of exceptions depending on the error. The recommended way (or canonical way if you like) of handling the exception looks as follows:

```csharp
// CommunicationException lives in System.ServiceModel, so the file
// needs "using System.ServiceModel;" at the top.
protected void _submitButton_Click(object sender, EventArgs e) {
    var client = new PondServiceClient();

    try {
        _pondStatusMessage.Text = _pondNameTextBox.Text +
            (client.IsFrozen(_pondNameTextBox.Text)
                ?
                    " is frozen."
                : " free of ice.");
        client.Close();
    }
    catch (CommunicationException ex) {
        // Handle exception
        client.Abort();
    }
    catch (TimeoutException ex) {
        // Handle exception
        client.Abort();
    }
    catch (Exception ex) {
        // Handle exception
        client.Abort();
    }
}
```

In all exception handlers I call client.Abort() to cancel the connection. In a real application I would also present a message to the user along the lines of “Cannot answer your request as a required service is temporarily not available. Please try again later.”
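Since the close-or-abort logic is the same for every call, it can be pulled into a small helper. The following is only a sketch under my own assumptions: the names `ServiceCall` and `Invoke` are made up for this illustration, and the helper uses a single catch block that aborts and rethrows instead of the three separate handlers. It relies on `ICommunicationObject`, the interface from System.ServiceModel that generated clients implement.

```csharp
using System;
using System.ServiceModel;

namespace DuckWeb {
    // Hypothetical helper; the names ServiceCall and Invoke are assumptions
    // made for this sketch and do not come from generated code.
    public static class ServiceCall {
        // Runs one call on the client and closes it afterwards.
        // If the call or the Close() fails, the client is aborted
        // and the exception is passed on to the caller.
        public static TResult Invoke<TClient, TResult>(
            TClient client, Func<TClient, TResult> call)
            where TClient : ICommunicationObject {
            try {
                TResult result = call(client);
                client.Close();   // graceful shutdown; may itself throw
                return result;
            }
            catch (Exception) {
                client.Abort();   // cancel the connection without waiting
                throw;            // the caller decides what to tell the user
            }
        }
    }
}
```

In the click handler this would reduce the service call to something like `ServiceCall.Invoke(new PondServiceClient(), c => c.IsFrozen(_pondNameTextBox.Text))`, wrapped in a try/catch that only has to set the message for the user.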

How about a using(var client = new PondServiceClient()) construct instead?

```csharp
protected void _submitButton_Click(object sender, EventArgs e) {
    using(var client = new PondServiceClient()) {
        _pondStatusMessage.Text = _pondNameTextBox.Text +
            (client.IsFrozen(_pondNameTextBox.Text)
                ?
                    " is frozen."
                : " free of ice.");
    } // Dispose will be called here.
}
```

Dispose will be called at the end of the using-block. However, this code doesn’t yet handle the exceptions that you might encounter when invoking the service. Furthermore, an exception can also be thrown when Close() is called from Dispose(). How would you handle those exceptions? The using-construct would lead to very ugly code. For a more detailed discussion see the references at the end of this post.
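The problem with an exception in Dispose() can be reproduced without any WCF at all. The following sketch uses a stand-in class, `FaultingClient`, which is not the generated PondServiceClient. It only mimics the relevant behaviour: a failed call can leave the channel faulted, and closing a faulted channel throws. All names and the pond name are made up for this illustration.

```csharp
using System;

// Stand-in for a generated WCF client; NOT the real PondServiceClient.
public sealed class FaultingClient : IDisposable {
    private bool _faulted;

    // Simulates a service call that fails and leaves the channel faulted.
    public bool IsFrozen(string pondName) {
        _faulted = true;
        throw new InvalidOperationException("Service call failed.");
    }

    // Simulates Dispose() calling Close(), which throws on a faulted channel.
    public void Dispose() {
        if (_faulted) {
            throw new InvalidOperationException("Cannot close a faulted channel.");
        }
    }
}

public static class UsingMaskDemo {
    // Returns the message of the exception that escapes the using-block.
    public static string Run() {
        try {
            using (var client = new FaultingClient()) {
                client.IsFrozen("Some Pond");
            }
            return "no exception";
        }
        catch (InvalidOperationException ex) {
            return ex.Message;
        }
    }
}
```

Calling `UsingMaskDemo.Run()` returns the message from Dispose(), not the one from the failed call: the exception thrown while leaving the using-block replaces the original one, so the actual cause of the failure is lost.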

This concludes this step of the blog post series on WCF services. There will be at least one more on how to propagate error conditions from a service to a consumer.

Update 09 April 2012: In a newer blog post I’m discussing various options for simplifying the client side. The discussion includes ways for handling everything according to Microsoft’s recommendations while at the same time avoiding code duplication.

References

Some examples for WCF client code that doesn’t close the connection to the service:

It is correctly mentioned and demonstrated at: